Too many IT teams run index rebuild jobs on autopilot. Every night. Every index. No questions asked.

Here’s the truth: if you’re doing this daily on indexes smaller than 10,000 pages, you might be chewing up CPU, bloating your logs, and annoying your users for zero gain.

Let’s fix that.

Internal vs. External fragmentation explained

SQL Server tracks two kinds of fragmentation:

- Internal = too much free space inside data pages

- External = out-of-order pages on disk. The logical sequence doesn’t match physical placement

Think:

- Internal = wasted space

- External = wasted reads

Does External Fragmentation Even Matter on SSDs?

Not really*.

On spinning disks, scattered reads could mean lots of tiny movements by the drive head = slower performance.

But on SSDs? Everything is basically a memory chip, so page order has minimal impact on read speed. Yet SQL Server still reports external fragmentation like it’s 2008.

That’s why blindly reacting to fragmentation numbers is often misleading.

* Not to be confused with internal

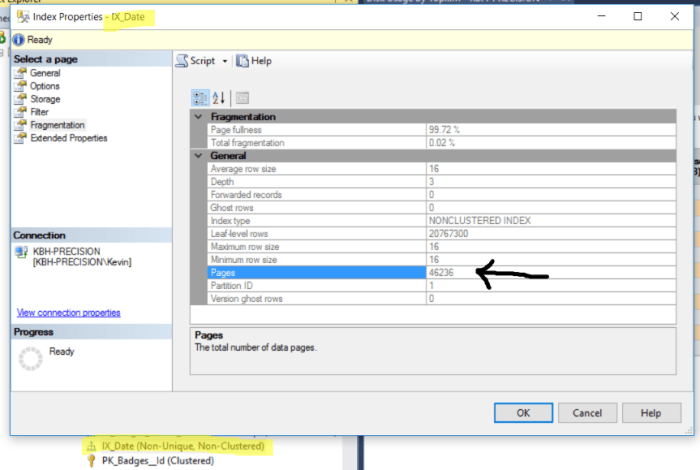

Don’t Worry about % – look at the page counts

My rule of thumb? If it’s under 10,000 pages, skip it.

You won’t see a meaningful performance benefit, and you’re just burning maintenance time and log space.

Microsoft’s default page count for index maintenance is 1000 8K pages. 8K x1000 = approx. 8MB. That is tiny.

Rebuild vs. Reorganize

Rebuild = drops and recreates the index (resource-heavy, spikes log use, can block unless ONLINE)

Reorganize = shuffles leaf-level pages gently (lighter, but less effective)

Here’s a smarter guideline than just looking at fragmentation %:

Don’t overlook statistics! They matter more than fragmentation in most cases.

SQL Server uses statistics to estimate how many rows a query will return. These estimates help the optimizer decide whether to use an index, do a table scan, join methods, and more.

If the stats are outdated or inaccurate, SQL Server can choose a bad query plan, which can lead to slow performance, excessive reads, or blocked processes.

That’s why I recommend updating stats daily in most transactional systems. It has a much higher ROI than blindly rebuilding indexes.

I usually let SQL Server start with its default sampling algorithm, then adjust if needed, especially if your data distribution is skewed or volatile.

Recommended Tools for Smarter Index Maintenance

Use tools that let you filter by both fragmentation level and page count, like:

- Ola Hallengren’s IndexOptimize (this can be tweaked to do Stats Only)

These give you control rather than nightly sledgehammer.

The Bottom Line

Stop defaulting to nightly index rebuilds.

If you’re on SSDs and under 10K pages, you’re solving a problem that doesn’t exist — and possibly creating new ones (like log growth and blocking).

Be strategic:

- Focus on meaningful fragmentation

- Consider index size before acting

- Separate stats updates from index maintenance

Smarter maintenance = better performance with fewer headaches.

Database Emergencies Cost Thousands Per Minute

When SQL Server fails, you need expert help fast.

For a limited time: First month of Pocket DBA® FREE with a 6-month plan.

- Senior DBAs available 24/7 • 1-hour SLA • Proactive problem prevention

Don’t wait for a disaster. Be prepared.

CLAIM FREE MONTH

Offer ends June 1st. Limited availability.

Thanks for reading!

–Kevin